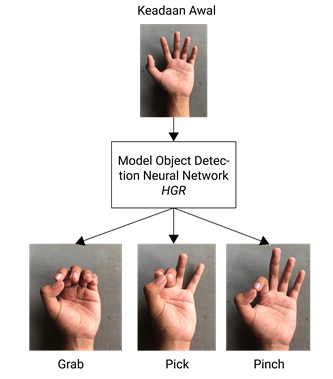

Neural Network Object Detection Model Based on Hand Gesture Recognition for Hand Prosthesis Control

Abstract

Most people disabled by accidental injuries are arm amputees, and amputation can cause psychological disorders and even severe trauma. Functional hand prostheses are therefore increasingly needed. Hand gesture recognition (HGR) can be used to control a hand prosthesis, because objects of similar shape tend to be handled with the same hand movement. This work uses three common movement types: pinch, pick, and grab. Implementing this concept requires a suitable neural network model. The model was developed from the pretrained YOLOv7 and YOLOv7-tiny networks, using a dataset collected by scraping publicly available images. The dataset consists of 317 images with 2,278 object labels, split 80:20 into training and testing sets. Training used the PyTorch framework for 300 epochs. The per-epoch loss values show that the model is trainable on the given dataset. The trained models were then evaluated on the testing set in terms of number of parameters, frames per second (FPS), and mean average precision (mAP). Overall, the pretrained YOLOv7 model achieved the best results, with 36.9 million parameters, 161 FPS, and an mAP of 98.11%. The model has the potential to be further developed and deployed to support hand prosthesis control.
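The abstract reports mAP on the testing set but does not spell out how it is computed. As a rough illustration, the following sketch computes per-class average precision (AP) with VOC-style all-point interpolation and then averages AP over the three gesture classes (pinch, pick, grab) to obtain mAP. The IoU threshold of 0.5, the `(x1, y1, x2, y2)` box format, the function names, and the toy detections are all assumptions made for illustration. This is not necessarily the evaluation code behind the reported 98.11%, and the YOLOv7 evaluation script may interpolate the precision-recall curve differently.

```python
from typing import Dict, List, Tuple

# Assumed box format: (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]
# Hypothetical detection record: (image_id, confidence, box).
Detection = Tuple[str, float, Box]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(
    detections: List[Detection],
    ground_truth: Dict[str, List[Box]],
    iou_thr: float = 0.5,  # assumed threshold (mAP@0.5)
) -> float:
    """All-point interpolated AP for a single class."""
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    # Each ground-truth box may be matched by at most one detection.
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    recalls: List[float] = []
    precisions: List[float] = []
    tp = fp = 0
    # Greedy matching from the most to the least confident detection.
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr and not matched[img][best_j]:
            matched[img][best_j] = True
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the envelope, accumulated wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: Dict[str, float]) -> float:
    """mAP as the unweighted mean of the per-class AP values."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Toy, made-up data for the three gesture classes (not from the paper).
gt_by_class: Dict[str, Dict[str, List[Box]]] = {
    "pinch": {"img1": [(10, 10, 50, 50)]},
    "pick": {"img2": [(0, 0, 20, 20), (30, 30, 60, 60)]},
    "grab": {"img3": [(0, 0, 10, 10)]},
}
det_by_class: Dict[str, List[Detection]] = {
    "pinch": [("img1", 0.9, (12, 12, 50, 50))],
    "pick": [("img2", 0.8, (0, 0, 20, 20)), ("img2", 0.6, (100, 100, 120, 120))],
    "grab": [("img3", 0.7, (50, 50, 60, 60)), ("img3", 0.4, (0, 0, 10, 10))],
}

aps = {c: average_precision(det_by_class[c], gt_by_class[c]) for c in gt_by_class}
map_score = mean_average_precision(aps)
print(aps, f"mAP@0.5 = {map_score:.4f}")
```

With this toy data, "pinch" has one correct detection (AP 1.0), "pick" finds only one of two objects (AP 0.5), and "grab" ranks a false positive above its true positive (AP 0.5), so the mean over the three classes is about 0.667. Averaging per class rather than per box keeps a rare gesture class from being drowned out by a frequent one.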



This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

This work is licensed under a Creative Commons Attribution 4.0 International License